Enabling trust in network services through secure, stable, and transparent internets

Published on Thu 25 April 2019

1SIDN/SIDN Labs, 2University of Amsterdam, 3University of Twente, 4SURFnet, 5NLnet Labs

We recently launched 2STiC, a joint research program to develop and experiment with mechanisms to increase the security, stability, and transparency of internet communications, using both emerging internet designs such as NDN, SCION, and RINA and the current Internet. Our long-term objective is to establish a national center of expertise that helps put the Dutch (and European) networking communities in a leading position in this field. In this blog, we provide an overview of the 2STiC program, including its goal, motivation, timeliness, and research topics.

There’s more than one internet?

Yes. An internet joins multiple autonomously operated computer networks into one logical network, thus enabling users to exchange data between any two machines across these networks (hence the term inter-network, or internet for short). The Internet as we know it today (with a capital I) is an example of an internet (lower case i) and uses core protocols like IP, TCP, BGP, and the DNS to interconnect over 60,000 networks worldwide. Other examples of internets include X.25 and CYCLADES (both predating the Internet) and new experimental systems such as SCION, NDN, and RINA.

Security, stability, and transparency are a problem because…?

Because the Internet was not designed to support 21st century applications. For example, the original designers of the Internet didn’t anticipate users wanting to connect “things” to the net that act upon people’s physical space, such as delivery drones, swarms of robots, self-driving cars, remotely operated surgical equipment, and connected door locks. With today’s Internet, this is a risk because security incidents such as a DDoS attack or a routing hijack may disrupt a thing’s network connection and as a result jeopardize people’s safety. In addition, if this happens, the Internet currently cannot efficiently reach the thing through a standby path because it does not support IP-level multi-path network connections.

Another development that the original Internet designers couldn’t foresee is that users would like to get more insight into and control over who receives data about them (e.g., collected by sensors, websites, and apps) and how it flows through the network. An example is a connected thermostat that shares temperature (and thus presence) information with remote services, which may additionally reside in a non-European jurisdiction. A similar example is the experiment of the Firefox browser to resolve domain names through a few large resolver operators instead of through a user’s local resolver (in their network or at their ISP) [3]. If this becomes default behavior, then users lose control over who handles their DNS queries and the public DNS runs the risk of being dominated by a few large corporations (centralization). Examples outside the consumer domain are medical and financial institutions that want to be able to verify the path their transaction data takes through the network.

The reason the Internet does not handle these types of applications very well is not that it’s “broken”, as some claim, but rather that the problem the Internet aimed to solve has changed, as Internet Hall of Famer Van Jacobson already pointed out in his 2006 lecture “A New Way to look at Networking” (from around 37:00). The problem of the early days (1970s) was how to enable university researchers to share expensive computer hardware via a network, which later evolved into how to make computer networking ubiquitously available for everyone [1]. The Internet solved both problems beyond imagination, but its success also introduced new problems because it wasn’t designed for newer types of usage with new security, stability, and transparency requirements.

So, now what?

In our opinion, the challenge we are facing is to fulfill these new requirements through a more diverse networking environment [1][2] in which other types of internets (e.g., based on SCION or RINA) complement and co-exist with the current Internet. This is a departure from today’s approach to continually “patch” one internet (the Internet) to support all new types of applications, which results in an increasingly complex architecture. Instead, we believe the sustainable way forward is to develop and spin up new networks when new classes of applications emerge that other internets can’t handle well. For example, critical applications such as remote surgery, intelligent transport systems, and financial transactions might want to control inter-network data paths and cryptographically verify the trustworthiness of both the routers and hops on the path (e.g., their vendors, types, and geolocation). An emerging internet architecture like SCION partly supports such new networking concepts by design, but they will be very difficult to fold into the IP architecture because BGP is designed for hop-based routing and does not consider the attributes of the network elements on the path. For other network functions it may be easier to incrementally incorporate them into the IP architecture, such as secure routing through BGPsec and RPKI.
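To make the idea of controlling inter-network paths based on hop attributes more concrete, here is a minimal Python sketch. It is not SCION's actual API or path selection mechanism; the `Hop` fields, the `PathPolicy` class, the vendor names, and the country codes are all hypothetical and serve only to illustrate the kind of policy a critical application might want to express.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One network element on a path. All fields are illustrative assumptions."""
    network: str
    vendor: str
    country: str
    attested: bool  # True if the hop presented verifiable proof of its properties


@dataclass(frozen=True)
class PathPolicy:
    """Hypothetical application policy on the attributes of every hop."""
    allowed_countries: frozenset
    trusted_vendors: frozenset
    require_attestation: bool = True

    def accepts(self, hop: Hop) -> bool:
        if self.require_attestation and not hop.attested:
            return False
        return (hop.country in self.allowed_countries
                and hop.vendor in self.trusted_vendors)


def select_paths(candidates, policy):
    """Keep only paths on which every hop satisfies the policy, shortest first."""
    usable = [path for path in candidates
              if all(policy.accepts(hop) for hop in path)]
    return sorted(usable, key=len)


# Made-up example data: three candidate paths through networks N1..N4.
policy = PathPolicy(
    allowed_countries=frozenset({"NL", "DE"}),
    trusted_vendors=frozenset({"VendorA", "VendorB"}),
)
path_1 = [Hop("N1", "VendorA", "NL", True), Hop("N2", "VendorB", "DE", True)]
path_2 = [Hop("N1", "VendorA", "NL", True), Hop("N3", "VendorC", "US", True)]
path_3 = [Hop("N1", "VendorA", "NL", True), Hop("N4", "VendorB", "DE", False)]

usable_paths = select_paths([path_1, path_2, path_3], policy)
```

In this sketch only `path_1` survives: `path_2` crosses a hop outside the allowed jurisdictions and vendors, and `path_3` contains a hop without attestation. The hard research questions, such as how the network proves these attributes and enforces the chosen path, are outside the scope of the example.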

Ultimately, we envision that applications will be able to select the internet that best meets their communication requirements, for instance in terms of the levels of security, resilience, and transparency those networks offer. This helps to better align service properties with the expectations of users and societies, for instance in terms of higher-level notions such as self-determination and trust. For example, network functions like transparency, verifiability, and control over network paths are important tools to preserve users’ trust in networked services and to enable societies to stay in control of the digital infrastructure they depend upon (self-determination).
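As a rough illustration of what "selecting the internet that best meets the requirements" could look like from an application's point of view, consider the following Python sketch. The integer levels, the `Profile` class, and the names `internet_a` and `internet_b` are placeholders we made up for this example; they do not describe the properties of any real architecture.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Profile:
    """Hypothetical property levels (higher is stronger) on an assumed 0-3 scale."""
    security: int
    resilience: int
    transparency: int

    def meets(self, required: "Profile") -> bool:
        return (self.security >= required.security
                and self.resilience >= required.resilience
                and self.transparency >= required.transparency)


def choose_internet(offered: Dict[str, Profile],
                    required: Profile) -> Optional[str]:
    """Return the first internet (in the dict's preference order) that meets
    all requirements, or None if no available internet qualifies."""
    for name, profile in offered.items():
        if profile.meets(required):
            return name
    return None


# Placeholder internets with made-up levels, listed in order of preference.
offered = {
    "internet_a": Profile(security=1, resilience=1, transparency=0),
    "internet_b": Profile(security=3, resilience=2, transparency=3),
}

critical_choice = choose_internet(offered, Profile(2, 2, 2))
casual_choice = choose_internet(offered, Profile(0, 1, 0))
no_choice = choose_internet(offered, Profile(3, 3, 3))
```

Here the critical application ends up on `internet_b`, the undemanding one stays on `internet_a`, and the third request finds no suitable internet at all. How such levels would be defined, measured, and verified in practice is an open question.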

While all this may sound like a daunting undertaking, the networking community does have almost 50 years of experience in operating and improving the Internet, which we can leverage to rethink the requirements and design of new complementary internets. For example, the NDN internet architecture reuses several of the Internet’s core architectural principles such as its “thin waist” and relatively simple core protocols, even though it is based on the radically different paradigm of content-centric networking (rather than the Internet’s model of host-centric networking) and specifically focuses on secure content distribution applications.

Example: urban delivery drones

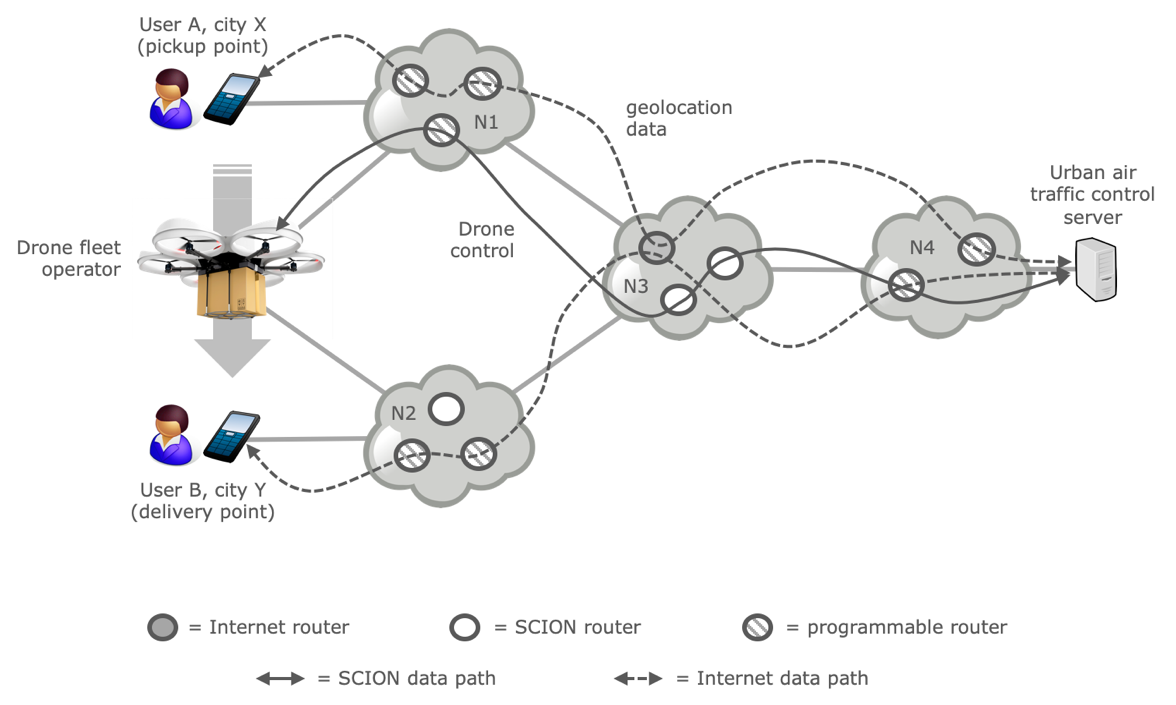

Figure 1 shows an example of the multi-internet environment that we envision. The application is a future urban transport system that enables users to send each other packages to and from their current locations using a delivery drone. User A requests a drone from a drone fleet operator, which instructs it to fly to user A. The drone registers with an “urban air traffic control” service, which guides it across urban areas, providing it with real-time information on flight paths of other drones to avoid mid-air collisions. User A attaches the package to the drone and instructs it to fly to user B, again under guidance of the urban air traffic control service.

Figure 1. Example use of a diversified internet environment.

In the example of Figure 1, users A and B use the Internet to signal their locations to the air traffic control service, but the control service interacts with the drone through a SCION network. The latter for instance provides increased protection against hijacks on the drone’s address space and enables the air traffic control service to only communicate with the drone via networks that the control service trusts. This is important to avoid the drone becoming unreachable when it flies to A or B, which may affect the safety of people on the ground. The networks in Figure 1 (N1 through N4) use a mix of IP routers, SCION routers, and programmable routers with the latter supporting both IP and SCION data plane protocols on the same hardware.

New research program

To address the above challenges, we recently set up a new joint research program called 2STiC (pronounced “to stick”), which is short for Security, Stability, and Transparency in inter-network Communication. Our goal is to develop and evaluate mechanisms to increase the security, stability, and transparency of internet communications, for instance by experimenting with and contributing to emerging internet architectures such as SCION, NDN, and RINA as well as the existing IP-based Internet. Our long-term objective is to establish a center of expertise in the field of trusted and resilient internets and help put the Dutch (and European) networking communities in a leading position in the field.

Our work focuses on developing and evaluating diversified internet environments to meet the security, resilience, and transparency requirements of modern distributed applications, such as the real-time drone example of Figure 1. We concentrate on systems and protocols at the network level and on deploying them using open programmable networks. We will use specific “vertical” services (e.g., intelligent transport systems or e-health services) to demonstrate the properties of the underlying internets for immediate and real-world problems. The advantage for operators of such services is that they get an increased level of security, resilience, and transparency “built into” the network and no longer need to operate (costly) leased lines or dedicated network equipment, or build custom functions into applications.

2STiC follows a hands-on approach based on measurements, running code, a national P4-programmable network, experiments, and demos. We aim to team up with similar research initiatives in Europe and elsewhere, as well as with standardization bodies such as the IRTF (e.g., the Path Aware Networking research group), the IETF (e.g., their Remote ATtestation ProcedureS working group), and ETSI. We will actively share our work and engage with the Dutch, European, and worldwide academic and operational communities as well as with policy makers and the broader public, for instance through technical reports, papers, and open source software.

Our consortium currently consists of 5 partners (NLnet Labs, SIDN, SURFnet, the University of Amsterdam, and the University of Twente), but we’d also like other partners to join 2STiC, such as universities, research labs, network operators, and service providers for specific “vertical” use cases.

Gaining momentum through programmable networks

We started 2STiC now because of two recent developments. First, we believe that in the next few years it will become feasible for new types of internets to leave the lab or testbed phase and go to actual deployment. This is because open programmable network equipment is becoming commercially available, which enables engineers to flexibly program switching hardware with their own packet processing functions (e.g., for SCION or NDN traffic). For example, we are working on an implementation of the SCION protocols in P4 [4], which is a domain-specific language for programming chipsets such as the Barefoot Tofino.

Ultimately, programmable networks may bring us a shared router hardware substrate and multiple virtualized internets on top, similar to how we virtualized servers as virtual machines.

More momentum: increased societal relevance

The second reason for spinning up 2STiC now is that the security, stability, and transparency of networked services are gaining more interest from society. For example, news articles in the Dutch mainstream media discuss Europe losing control of its digital infrastructure to a few large corporations, for instance in the field of artificial intelligence or IT in general. Similarly, people worry about losing control of who handles their data, which essentially boils down to the discrepancy between the European model of handling user data (users decide and enforce how their data is used) and the American and Chinese models (corporations and the state decide and enforce, respectively), as prof. José van Dijck recently pointed out in a lecture (from 27:00). At the same time, critical services (e.g., online banking and heating systems) are being disrupted by people buying DDoS attacks for 40 euros, and regimes around the world use the Internet as a way to control their citizens.

We believe these two developments make 2STiC a timely and relevant program that is driven by both technological and societal developments.

What are you guys going to look into?

Specific topics we’ll be focusing on include:

- Path awareness and control: what mechanisms are necessary to give users and applications more control over and insight into how their traffic travels through a multi-domain environment in a scalable way? For example, how can we enable users to decide on a network path and provide guarantees that the network enforces these decisions?

- Attestation of (parts of) network equipment: how can we gain (cryptographically) verifiable proof about properties of network equipment, such as jurisdictions involved, equipment manufacturer, and communication requirements?

- Naming and addressing: how can the naming and addressing schemes of internets improve security, stability, and transparency? For example, can we increase security by limiting the scope of a name to a particular context/subnetwork, as opposed to the global names we currently have with the public DNS?

- Evaluation of individual internet architectures: to what degree do emerging architectures such as SCION, NDN, and RINA contribute to increasing the security, stability, and transparency of internets compared to IP-based networks, and for which types of applications?

- Autonomous management: how can we enhance individual internets with autonomous self-repair capabilities to handle faults (e.g., human error or failing hardware) in a fail-safe manner and at scale [5], thus increasing internet resilience and security? This minimizes the amount of manual management effort, which reduces the probability of errors and costs for network operators. Also, what network management abstractions does this require for operators?

- System architectures: what kind of system architectures (hosts, routers, switches) do we need to enable multiple internets to share the same programmable hardware? For example, how do new transport protocols (e.g., NDN’s or RINA’s) work with TCP and its way of congestion control if they share the same (wireless) link? Also, does the control plane need to be physically isolated from the data plane?

- Evaluation of open programmable networks: how well do technologies such as P4 (data plane) and Software Defined Networking (separation of control plane) work as mechanisms to diversify our network environment, for instance in terms of security and scalability? Also, do programmable switches need to limit the number of data planes they support to avoid an unbridled growth of internet types?

Next steps

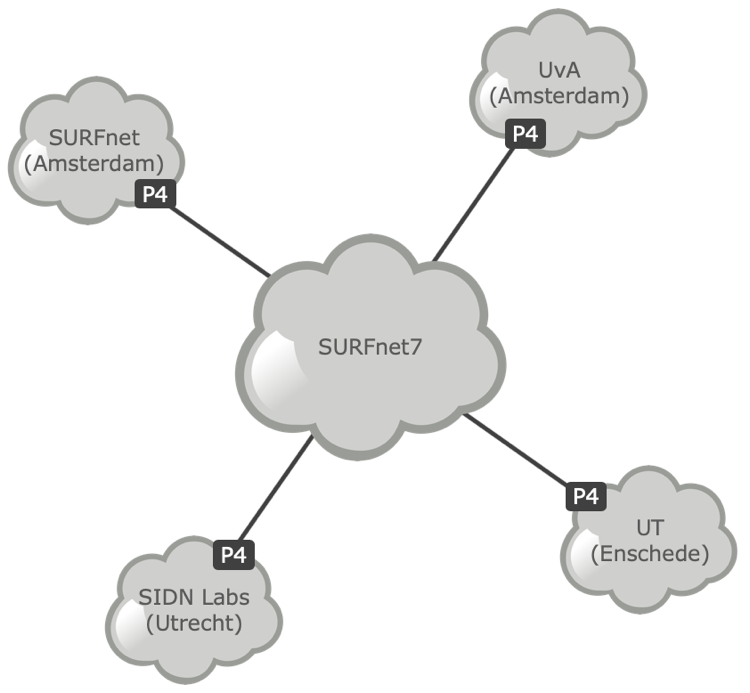

Our next steps are to expand the setup of the 2STiC testbed and to set up P4 switches at the project partners (see Figure 2). We will also experiment with the SCION-based experimental internet that we recently connected to, for instance by expressing the SCION core protocols in P4 so we can run them on our 2STiC testbed. In the future, we’ll also extend our work to other types of internets, such as NDN and RINA.

The first application we’ll be using is In-band Network Telemetry (INT), but we’ll also be engaging with experts from specific verticals (e.g., e-health and intelligent transport domains) to experiment with services relevant to them on our testbed.

Figure 2. 2STiC’s P4 testbed (simplified).

Finally, we’ll be running a “Birds of a Feather” (BoF) session at TNC19 (a conference for national research and educational networks) and we plan to submit several project proposals to get part of the work co-funded, for instance through H2020 or the National Cybersecurity Research Agenda (NCSRA).

Feedback? Let us know!

As usual, we very much welcome your feedback. We’d be particularly interested in talking with you if you’re a service provider in need of more secure, resilient, and transparent internet communications. Feel free to drop us an email at caspar.schutijser@sidn.nl.

Acknowledgements

We thank Luuk Hendriks (University of Twente) and Simon Hania (SIDN supervisory board) for their feedback on the draft of this blog.

References

[1] M. Ammar, “Ex uno pluria: The Service-Infrastructure Cycle, Ossification, and the Fragmentation of the Internet”, ACM SIGCOMM Computer Communication Review, Vol. 48, Issue 1, January 2018, https://ccronline.sigcomm.org/2018/ccr-january-2018/ex-uno-pluria-the-service-infrastructure-cycle-ossification-and-the-fragmentation-of-the-internet/

[2] J. S. Turner and D. E. Taylor, “Diversifying the Internet”, Proc. IEEE GLOBECOM, 2005

[3] B. Hubert, “Opinion: DNS privacy debate”, blog, Feb 2019, https://blog.apnic.net/2019/02/08/opinion-dns-privacy-debate/

[4] P. Bosshart, D. Daly, G. Gibb, M. Izzard, N. McKeown, J. Rexford, C. Schlesinger, D. Talayco, A. Vahdat, G. Varghese, and D. Walker, “P4: Programming Protocol-Independent Packet Processors”, ACM SIGCOMM Computer Communication Review, Vol. 44, Issue 3, July 2014, pp. 87-95

[5] D. Clark, C. Partridge, J.C. Ramming, and J.T. Wroclawski, “A Knowledge Plane for the Internet”, SIGCOMM’03, August 25–29, 2003, Karlsruhe, Germany

1 Kees Neggers is a member of SIDN’s supervisory board and inductee of the Internet Hall of Fame.